Here, we're going to demonstrate the significant speed differences we might encounter when inserting into a SQLite database.

Let's dive in!

A little setup and prep

Similar to the blog referenced above, we're going to use the following:

• PowerShell

• SQLite (we're going to continue to use a PDQ Inventory database)

• A CSV file full of Product Key info (real or contrived)

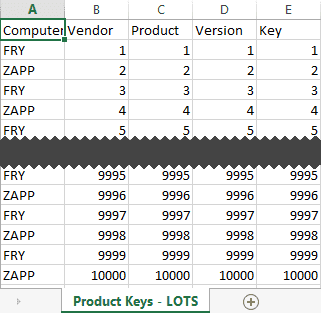

In our case, we're going to reuse our previous (contrived) CSV file:

Since we are going to be poking around with our PDQ Inventory database, I'm going to highly recommend a backup (steps to backup a database).

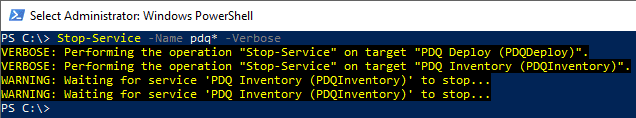

Additionally, since we are going to be working directly with our PDQ Inventory database, we're going to close any open PDQ Deploy or PDQ Inventory consoles and stop their respective services.

Wave a little PowerShell and we'll be good to go.

Stop-Service -Name pdq* -Verbose

Carry on!

Improving bulk insert speed in SQLite

Here, I'm hoping that we'll be able to see the importance of batching queries together into fewer transactions in order to improve the speed of our inserts.

Let's break this down into the two types of examples we're going to look at:

1. Multiple transactions - slow and boring.

a. Query and insert each item as we iterate through the file

2. Single transaction - fast (and possibly furious).

a. Batching multiple queries into a single transaction

The first option (multiple transactions) seems to be what most people are tempted to do, especially if you're doing ad-hoc queries against the database. For small amounts of data, it isn't likely to matter too much.

With large datasets, however, you may wish to group your queries into as few transactions as possible.

Why does it matter, you ask?

Science, mostly. Science and fast inserts into SQLite. Check out this link for more detailed info on why we want to consolidate our queries into as few transactions as possible - (read here for more info on why Insert can be slow).

Enough talk! Let's party!

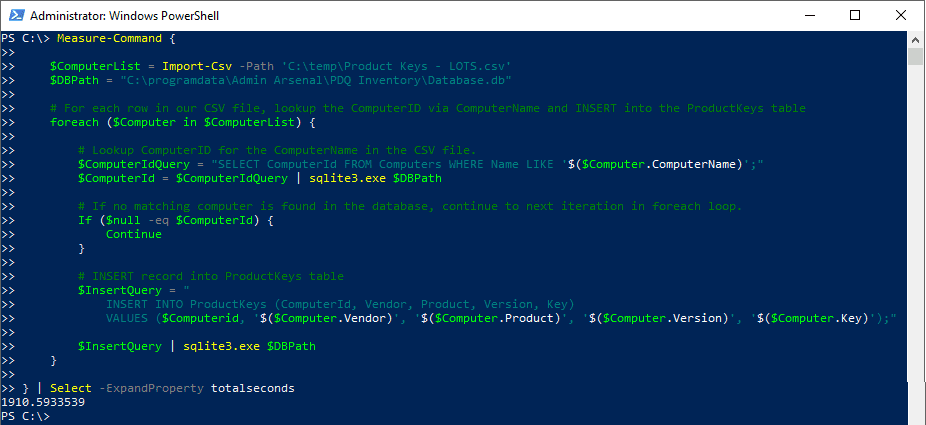

Example #1 - Query and Insert as we go

With this option, we are going to query and update each record as we iterate through each row in our CSV file (10,000 records). (See Import-Csv)

$ComputerList = Import-Csv -Path 'C:\temp\Product Keys - LOTS.csv'

$DBPath = "C:\programdata\Admin Arsenal\PDQ Inventory\Database.db"

# For each row in our CSV file, lookup the ComputerID via ComputerName and INSERT into the

ProductKeys table

foreach ($Computer in $ComputerList) {

# Lookup ComputerID for the ComputerName in the CSV file.

$ComputerIdQuery = "SELECT ComputerId FROM Computers WHERE Name LIKE

'$($Computer.ComputerName)';"

$ComputerId = $ComputerIdQuery | sqlite3.exe $DBPath

# If no matching computer is found in the database, continue to next iteration in foreach loop.

If ($null -eq $ComputerId) {

Continue

}

# INSERT record into ProductKeys table

$InsertQuery = "

INSERT INTO ProductKeys (ComputerId, Vendor, Product, Version, Key)

VALUES ($Computerid, '$($Computer.Vendor)',

'$($Computer.Product)', '$($Computer.Version)', '$($Computer.Key)');"

$InsertQuery | sqlite3.exe $DBPath

}

1910 seconds! Gah! That's over 30 minutes!

I'm pretty sure that molasses runs faster than that on a cold winter morning. (I'm not going to validate that, however…)

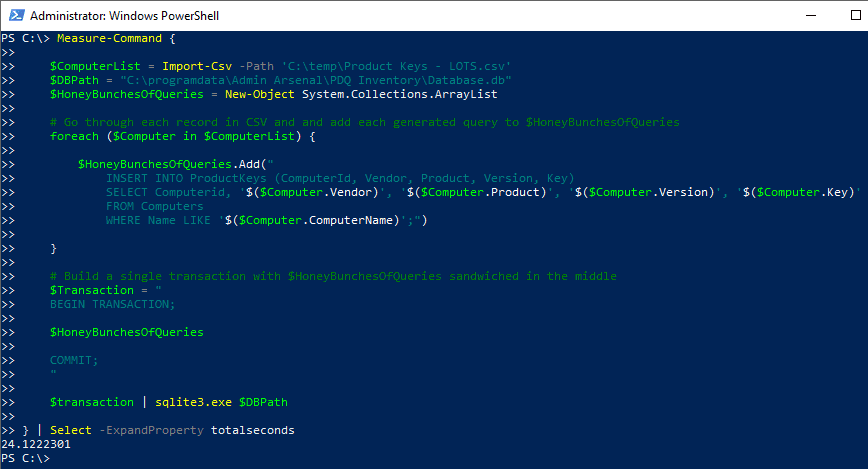

Example #2 - Batch all queries into a single transaction

In order to get all of our queries into a single transaction, we're going to do the following:

1. Import a CSV file ($ComputerList)

2. Create an empty array ($HoneyBunchesOfQueries)

3. Loop through our list of computers and build individual queries and add them to our array (the foreach section)

4. Sandwich the array values inside of a SQLite transaction ($Transaction)

5. Run that transaction against our database ($DBPath)

$ComputerList = Import-Csv -Path 'C:\temp\Product Keys - LOTS.csv'

$DBPath = "C:\programdata\Admin Arsenal\PDQ Inventory\Database.db"

$HoneyBunchesOfQueries = New-Object System.Collections.ArrayList

# Go through each record in CSV and add each generated query to $HoneyBunchesOfQueries

foreach ($Computer in $ComputerList) {

$HoneyBunchesOfQueries.Add("

INSERT INTO ProductKeys (ComputerId, Vendor, Product, Version, Key)

SELECT Computerid, '$($Computer.Vendor)', '$($Computer.Product)','$($Computer.Version)', '$($Computer.Key)'

FROM Computers

WHERE Name LIKE '$($Computer.ComputerName)';")

}

# Build a single transaction with $HoneyBunchesOfQueries sandwiched in the middle

$Transaction = "

BEGIN TRANSACTION;

$HoneyBunchesOfQueries

COMMIT;

"

$transaction | sqlite3.exe $DBPath

24 seconds!

This script had the same outcome as the previous script but didn't take over 30 minutes to complete!

Wrapping up

Well, that's about it!

I believe that we have a clear winner unless you enjoy tasks taking orders of magnitude longer than they need to.

Cheers and Happy PowerShelling!