An AI acceptable use policy defines how employees can safely and responsibly use AI systems at work. This template helps you set clear, ethical, and compliant guidelines for AI use within your organization.

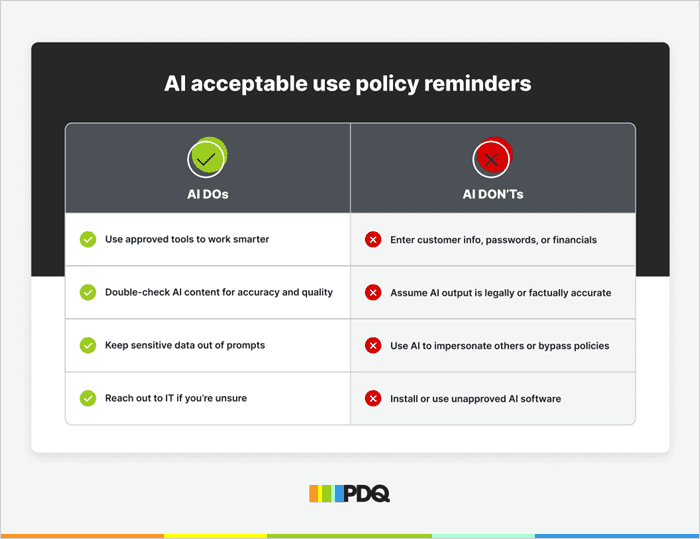

Quick reminders poster (download or display)

Post this where employees will see it, such as near their desks. Alternatively, include it in onboarding kits as a handy cheat sheet on how to use AI responsibly.

How to use this template

This template helps you build an AI acceptable use policy tailored to your environment. It isn't a copy-and-paste document; instead, it prompts your teams to define risks, goals, and responsibilities.

Use it to:

Help employees understand what’s smart vs. risky AI behavior

Draw clear lines for security and compliance

Make ownership and accountability easy to follow

Get buy-in from stakeholders across departments

What are best practices for writing an AI acceptable use policy?

These tips can help you write an AI policy that hits the trifecta: clear, effective, and easy to follow.

Be specific but flexible.

Define clear expectations, but leave room for change as tools and risks evolve. You can't predict every future use case.

Assign clear ownership.

Designate a policy owner (for example, IT, security, or legal) and set a review schedule. AI moves quickly, so your policy needs to keep pace.

Think cross-functionally.

Involve legal, HR, security, and department leads when drafting. You'll get a more comprehensive perspective and better buy-in.

Avoid one-size-fits-all rules.

Not all departments use AI the same way. Tailor guidance to fit different roles (e.g., developers vs. HR vs. marketing).

Start with education, not enforcement.

Lead with a learning mindset. Many employees use AI casually without realizing the risks. Your policy should help them use it more wisely, not just say “no.”

Be transparent about intent.

Explain why the policy exists: to protect data, stay compliant, and enable smart use. It's easier for people to follow rules they understand.

Focus on real-world examples.

Include use cases that reflect how your team works. Show employees how they can and can't use AI, and explain why. That's what sticks.

Policy planning checklist

Before you write your policy, work through these steps to prepare:

☐ Audit your organization’s current AI usage

☐ Identify which departments currently use AI tools (marketing, dev, HR, etc.)

☐ Dig into the applicable regulations (e.g., GDPR, HIPAA)

☐ Consult legal, security, HR, and executive stakeholders

☐ Define the business goals and boundaries for AI use

AI acceptable use policy framework

Use this section to fill in your organization’s AI usage policies, concerns, and standards.

1. Policy overview & purpose

This section lays out how your team should responsibly and securely use AI technology at work. The goal is to encourage smart use while protecting your people, data, and reputation.

Fill in:

☐ What goals does your company want to accomplish by using AI?

☐ What AI risks do you want to avoid (e.g., data leakage, hallucinations, bias)?

☐ Who does the policy apply to (employees, contractors, third-party vendors)?

2. Acceptable AI tools & use cases

Not all AI tools (or uses) are created equal. This section helps clarify what your org supports, what’s off-limits, and where to draw the line.

Fill in:

☐ Approved AI tools (e.g., ChatGPT/OpenAI, Microsoft Copilot, GitHub Copilot)

☐ Banned or restricted AI tools (and why)

☐ Examples of permitted use (e.g., drafting emails, summarizing public documents)

☐ Examples of prohibited use (e.g., sharing sensitive data, auto-generating contracts)
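If you want the approved and banned lists above to be checkable by scripts or onboarding tools, one option is to keep them as a small register. The Python sketch below assumes a hypothetical three-level status (approved, restricted, banned) along with placeholder tool and team names; it illustrates the idea and is not a feature of any specific product.

```python
from enum import Enum


class ToolStatus(Enum):
    APPROVED = "approved"      # cleared for general work use
    RESTRICTED = "restricted"  # allowed only for the teams listed
    BANNED = "banned"          # not permitted for any work use


# Hypothetical register: replace the placeholder names with your own tools.
# Each entry maps a tool to (status, teams allowed when restricted, reason).
TOOL_REGISTER = {
    "Enterprise chat assistant": (ToolStatus.APPROVED, frozenset(), "Covered by vendor data agreement"),
    "Code assistant": (ToolStatus.RESTRICTED, frozenset({"engineering"}), "Approved for developers only"),
    "Unvetted browser plugin": (ToolStatus.BANNED, frozenset(), "No security or privacy review"),
}


def is_tool_allowed(tool: str, team: str) -> bool:
    """Return True if members of `team` may use `tool` for work."""
    entry = TOOL_REGISTER.get(tool)
    if entry is None:
        return False  # not in the register yet: default to "not approved"
    status, allowed_teams, _reason = entry
    if status is ToolStatus.APPROVED:
        return True
    if status is ToolStatus.RESTRICTED:
        return team in allowed_teams
    return False  # BANNED
```

For example, `is_tool_allowed("Code assistant", "marketing")` returns `False`. Treating unlisted tools as not approved means any new tool has to go through your approval process before anyone can use it.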

3. Data privacy & confidentiality guidelines

AI tools can be helpful — but only if they’re used with care. This section helps your team understand what data is off-limits, what classifications matter, and how to stay compliant while still getting value from AI.

Fill in:

☐ What types of data are prohibited from being input into AI tools?

☐ What data classification levels (public, internal, confidential, restricted) apply?

☐ Which compliance standards must AI use follow (e.g., SOC 2, ISO 27001)?

☐ Is output from AI tools considered proprietary company data?
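The classification questions above can be reduced to a simple rule: each category of AI tool gets a sensitivity ceiling, and any data above that ceiling stays out. The sketch below uses the four levels listed above. The tool tiers (`public_tool`, `enterprise_tool`) and their ceilings are hypothetical examples, so set them to whatever your legal and security teams agree on.

```python
from enum import IntEnum


class DataClass(IntEnum):
    # Ordered from least to most sensitive, so levels can be compared with <=.
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical ceilings: the most sensitive class each tool tier may receive.
MAX_CLASS_BY_TIER = {
    "public_tool": DataClass.PUBLIC,        # e.g., consumer AI tools with no data agreement
    "enterprise_tool": DataClass.INTERNAL,  # e.g., tools under a vendor contract with data protections
}


def may_submit(data_class: DataClass, tool_tier: str) -> bool:
    """Return True if data of this class may be entered into a tool of this tier."""
    ceiling = MAX_CLASS_BY_TIER.get(tool_tier)
    if ceiling is None:
        return False  # unknown tier: block by default
    return data_class <= ceiling
```

With these example ceilings, `may_submit(DataClass.CONFIDENTIAL, "enterprise_tool")` returns `False`, and restricted data is never allowed into any tier.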

4. Employee responsibilities

Using smart tools effectively means understanding your role. This section spells out what’s expected of employees when it comes to verifying content, protecting data, and following internal guidelines.

Fill in:

☐ Expectations around verifying AI-generated content

☐ Guidelines for citing AI use in work products

☐ Reporting procedures for misuse or data exposure

☐ Any mandatory training, activities, or annual refreshers

5. Security & access control

Managing who can use AI — and how — is key to reducing risk. This section helps define how tools are approved, monitored, and secured across the organization.

Fill in:

☐ Who is authorized to approve new AI tools?

☐ How is tool usage tracked and logged?

☐ Are there restrictions or special rules for remote workers or personal devices?
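One way to answer "How is tool usage tracked and logged?" is with a structured usage record. The sketch below is an assumption, not a prescribed format. The field names are hypothetical, and the record stores the input's data classification rather than the prompt text, so the log itself doesn't become a store of sensitive data. It also applies a hypothetical rule that flags non-public data used on unmanaged or personal devices for follow-up.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class AIUsageEvent:
    user_id: str
    tool: str
    data_class: str       # classification of the input, e.g. "public" or "internal"
    device_managed: bool  # False for personal or otherwise unmanaged devices
    timestamp: str        # UTC, ISO 8601


def make_event(user_id: str, tool: str, data_class: str, device_managed: bool) -> AIUsageEvent:
    """Build an event stamped with the current UTC time."""
    return AIUsageEvent(user_id, tool, data_class, device_managed,
                        datetime.now(timezone.utc).isoformat())


def to_log_line(event: AIUsageEvent) -> str:
    """Serialize one event as a single JSON line for a log pipeline."""
    return json.dumps(asdict(event), sort_keys=True)


def needs_review(event: AIUsageEvent) -> bool:
    """Flag non-public data used on an unmanaged device (hypothetical rule)."""
    return not event.device_managed and event.data_class != "public"
```

For example, an event built with `make_event("u123", "Enterprise chat assistant", "internal", device_managed=False)` would be flagged by `needs_review`, while the same event on a managed device would not.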

6. Bias, ethics & fair use

AI isn’t neutral, and how you use it matters. This section helps ensure your organization uses AI in ways that are fair, inclusive, and aligned with your company values.

Fill in:

☐ Guidelines to prevent biased or discriminatory AI output (e.g., audit training data for demographic representation, restrict the use of AI-generated content that perpetuates stereotypes or discriminatory assumptions, or establish review processes)

☐ Rules for using AI in hiring, performance reviews, or customer interactions

☐ Policies around AI-generated creative content (IP rights, attribution, etc.)

7. Policy review & updates

AI moves fast. Your policy should too. Keep this policy relevant by revisiting it regularly and assigning clear ownership.

Fill in:

☐ How often will this policy be reviewed?

☐ Who owns the policy (IT? Legal? Security?)

☐ How will changes be communicated to employees?

Supporting materials (optional)

Include any supporting materials for additional clarity and convenience:

AI tool request forms

Security training or refresher modules

Internal data classification guidelines

Glossary of AI terms for employees