Time to upgrade to the new PDQ Inventory 9. Yes, there are new features. Read on to see what’s new in this version. If your PDQ Inventory license is current, you can get these upgrades at no extra charge.

What’s new in PDQ Inventory 9

Network discovery

Add computers to PDQ Inventory with the network discovery tool. This new feature scans the IP ranges you supply and adds the discovered devices to your PDQ Inventory database. It requires a current PDQ Inventory license. To use it, go to Add Computers > Network Discovery.

This opens the Network Discovery window, where you can specify a subnet, individual IP addresses, or IP address ranges to search for computers. Click Start Discovery to begin adding computers and devices to PDQ Inventory. The Network Discovery Status window then opens so you can follow the progress.
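If you want to check how many addresses a subnet or range covers before starting a discovery, a short script can help. The following is a minimal sketch that uses only Python's standard `ipaddress` module. It is not part of PDQ Inventory, the function name `expand_targets` is made up for illustration, and the `start-end` range notation is an assumption about how you might write a range, not the format the Network Discovery window expects.

```python
import ipaddress


def expand_targets(spec: str) -> list[str]:
    """Expand a subnet, a single address, or a start-end range into addresses.

    The "start-end" notation is an assumption made for this sketch.
    """
    spec = spec.strip()
    if "-" in spec:
        # Range form, for example "10.0.0.5-10.0.0.7" (both ends included).
        start_text, end_text = spec.split("-", 1)
        start = ipaddress.ip_address(start_text.strip())
        end = ipaddress.ip_address(end_text.strip())
        if int(end) < int(start):
            raise ValueError(f"range end precedes start: {spec}")
        return [str(ipaddress.ip_address(n)) for n in range(int(start), int(end) + 1)]
    if "/" in spec:
        # Subnet form, for example "192.168.1.0/30"; hosts() skips the
        # network and broadcast addresses on ordinary IPv4 subnets.
        network = ipaddress.ip_network(spec, strict=False)
        return [str(host) for host in network.hosts()]
    # Single address form; ip_address() raises ValueError on bad input.
    return [str(ipaddress.ip_address(spec))]


print(len(expand_targets("192.168.1.0/24")), "usable addresses in a /24")
```

A large count is a hint that the discovery may take a while, so it can be worth splitting a wide subnet into smaller ranges.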

Automatic backups

Your PDQ Inventory database is now backed up automatically. The settings are under File > Preferences > Database. The image below shows the default settings for backups; change them to suit your needs. You can also run a backup at any time by clicking Backup Now. Manual backups count against the number of backups kept, so the oldest backup is deleted to stay within the number you set.
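The retention rule described above (keep a fixed number of backups and drop the oldest first) can be sketched in a few lines. This is only an illustration of the rule, not PDQ Inventory's actual implementation; the function name `prune_backups` and the file names are hypothetical.

```python
def prune_backups(backups: list[str], keep: int) -> tuple[list[str], list[str]]:
    """Split backups into (kept, deleted), assuming the list is sorted oldest first."""
    if keep < 1:
        raise ValueError("keep must be at least 1")
    # Number of backups beyond the retention limit (never negative).
    excess = max(len(backups) - keep, 0)
    return backups[excess:], backups[:excess]


# Hypothetical file names: three scheduled backups plus one manual backup.
existing = ["backup-01.db", "backup-02.db", "backup-03.db", "backup-manual.db"]
kept, deleted = prune_backups(existing, keep=3)
print("kept:", kept)
print("deleted:", deleted)
```

With a limit of three, the manual backup pushes the oldest scheduled backup out, which is why a Backup Now run can remove an older file.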

Feature improvements

Registry scanner improvements

PDQ Inventory 9 introduces wildcards for registry scanners, similar to the wildcards added to the File Scanner in version 8. To add a registry scanner, go to File > Preferences > Scan Profiles. Click New and, in the Scan Profile: New Scan Profile window, select Add > Registry.

Available wildcards are listed for your reference. For example, the registry scanner below scans all subkeys and values under HKEY_LOCAL_MACHINE\SOFTWARE\Google\Update\ClientState. The data collected can be used to determine whether the 32-bit or 64-bit version of Chrome is installed.

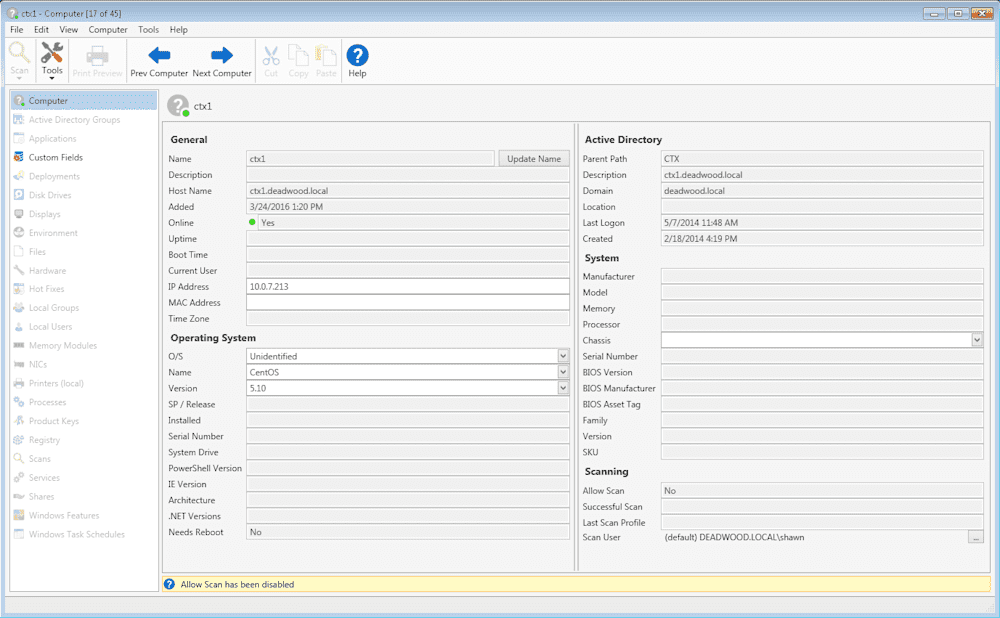
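To get a feel for how a wildcard selects everything under a key, here is a small sketch using generic glob-style matching from Python's standard `fnmatch` module. This is an assumption-laden illustration: PDQ Inventory's own wildcard syntax is the one listed in the scanner window and may differ, and the subkey and value names below are placeholders, not real Chrome data.

```python
from fnmatch import fnmatchcase


def match_registry_paths(pattern: str, paths: list[str]) -> list[str]:
    """Return the paths that match a glob-style pattern, ignoring case."""
    lowered = pattern.lower()
    # fnmatchcase treats only *, ? and [ ] as special, so backslashes in
    # registry paths are matched literally.
    return [p for p in paths if fnmatchcase(p.lower(), lowered)]


BASE = r"HKEY_LOCAL_MACHINE\SOFTWARE\Google\Update\ClientState"

# Placeholder paths for illustration only.
sample_paths = [
    BASE + r"\{example-app-id}\ExampleValue",
    BASE + r"\{other-app-id}\ExampleValue",
    r"HKEY_LOCAL_MACHINE\SOFTWARE\Example\Other",
]

matches = match_registry_paths(BASE + r"\*", sample_paths)
for path in matches:
    print(path)
```

Here a single trailing `*` matches every subkey and value beneath the base key, while the unrelated path is left out.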

Edit IP address

PDQ Inventory 8 added the ability to track non-Windows devices, which cannot be scanned the way your Windows computers can. In this release, you can now add or edit the IP address listed for these devices. To edit fields for these items (which have Allow Scan disabled), double-click the device in the PDQ Inventory console. Fields that are white can be edited.

Adding product keys

Save product key information in PDQ Inventory. Double-click a computer and select Product Keys in the left pane to enter keys.