Have you ever been expected to magically manage your environment without the correct permissions to do so? This was me in my previous job. I was required to keep a multitude of applications up to date, many of which required reboots, and yet I wasn't allowed to force reboots. Instead, I was told to send out a weekly email on Fridays asking users to restart their machines. Guess how many users actually followed my instructions? About -50 (my optimistic estimate). That just wasn't good enough for me. So I did what I always do: I automated my problem away using PDQ products and a simple PowerShell script.

Check out our article on restart vs. shutdown where we compare the two boot options.

Need to keep your users informed about reboots? Learn how to use the BurntToast module to display notifications with PowerShell.

PDQ Inventory makes life easy

PDQ Inventory has an amazing, underutilized feature: dynamic collections. With these collections, you can view a list of computers based on almost anything you can think of, including installed applications, IP addresses, MAC addresses, installed memory, chassis type, and registry information. There are even tons of pre-built collections ready to go. For this example, we're going to use the pre-built collection 'Reboot Required'. This collection lists all scanned computers that need a reboot because of items such as Windows Updates, application installations, file renaming, and cleanup.

So how do we use this to our benefit? It's actually pretty easy. PDQ Deploy can deploy an application or script based on these collections to those computers. So all we need to do is set up a schedule within PDQ Deploy that will deploy a script to remind users to reboot their machine if they haven't done so.

Why use a script?

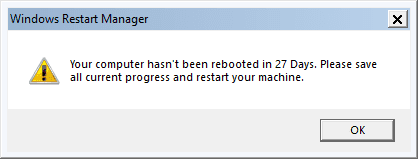

Why use a script instead of a message step within Deploy? By using a PowerShell script, I can make a pop-up look like it’s coming from Windows. When users think this message is coming from Windows, they’re more willing to pay attention. Another benefit is the ability to add dynamic information within the message. For instance, I can query WMI to see how long it’s been since someone has rebooted their machine and include that data within the pop-up message. Lastly, I can add conditions based on that information, such as not showing the message unless it’s been more than six days.

Simple pop-up message

First, we load the PresentationFramework assembly, which is part of Windows Presentation Foundation (WPF), in case it isn’t already loaded on the target machine. It’s a simple one-liner.

# Load the PresentationFramework assembly (WPF)
Add-Type -AssemblyName PresentationFramework

Next, we query WMI to see how long it’s been since the target was last rebooted and, in this case, look at the uptime in days. (See Get-Date.)

# Calculate uptime in days
$WMI = Get-WmiObject Win32_OperatingSystem
$Uptime = (Get-Date) - ($WMI.ConvertToDateTime($WMI.LastBootUpTime))
$UptimeDays = $Uptime.Days

I then added an if condition to only continue to the message box if it’s been more than six days. I want to inform my users, but I don’t want to annoy them too much. (If the uptime in days is greater than 6, create a dynamic variable we can add to the message later; if not, stop the script.)

if ($UptimeDays -gt 6) {
    $Output = "$UptimeDays Days"
} else {
    Exit 0
}

Lastly, I create the message box and assign a value to each option so that I can easily reuse this script later. In this case, I only want the user to acknowledge the message, so I add a warning icon and an OK button.

# Message Box Options
$Title = "Windows Restart Manager"
$Body = "Your computer hasn't been rebooted in $Output. Please save all current progress and restart your machine."
$Icon = "Warning"
$Button = "OK"

# Show Message Box
[System.Windows.MessageBox]::Show($Body, $Title, $Button, $Icon)

Here is the full script and an example of the pop-up.

# Load the PresentationFramework assembly (WPF)
Add-Type -AssemblyName PresentationFramework

# Calculate uptime in days
$WMI = Get-WmiObject Win32_OperatingSystem
$Uptime = (Get-Date) - ($WMI.ConvertToDateTime($WMI.LastBootUpTime))
$UptimeDays = $Uptime.Days

# Only continue if the machine has been up for more than six days
if ($UptimeDays -gt 6) {
    $Output = "$UptimeDays Days"
} else {
    Exit 0
}

# Message Box Options
$Title = "Windows Restart Manager"
$Body = "Your computer hasn't been rebooted in $Output. Please save all current progress and restart your machine."
$Icon = "Warning"
$Button = "OK"

# Show Message Box
[System.Windows.MessageBox]::Show($Body, $Title, $Button, $Icon)
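One thing to keep in mind: Get-WmiObject exists in Windows PowerShell 5.1 but was removed in PowerShell 6 and later. If your deployments ever run under a newer PowerShell, the uptime calculation could be sketched with Get-CimInstance instead, which already returns LastBootUpTime as a DateTime. This is only a rough sketch, not the script I deployed. The function name Get-UptimeDays and its parameters are made up for illustration, and the optional parameters exist purely so the math can be checked with fixed dates.

```powershell
# Sketch only: Get-UptimeDays and its parameters are illustrative names,
# not part of the original script.
function Get-UptimeDays {
    param(
        # Defaults to the boot time reported by CIM. The default is only
        # evaluated when no value is passed in.
        [datetime]$LastBoot = (Get-CimInstance -ClassName Win32_OperatingSystem).LastBootUpTime,
        [datetime]$Now = (Get-Date)
    )
    # Subtracting two DateTime values yields a TimeSpan;
    # .Days is its whole-day component.
    ($Now - $LastBoot).Days
}

# Example with fixed dates, so the result does not depend on the machine.
Get-UptimeDays -LastBoot '2024-01-01' -Now '2024-01-09'   # 8
```

In the full script, calling Get-UptimeDays with no arguments and assigning the result to $UptimeDays would stand in for the three WMI lines. The if condition and the message box would stay the same.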

PDQ Deploy schedule

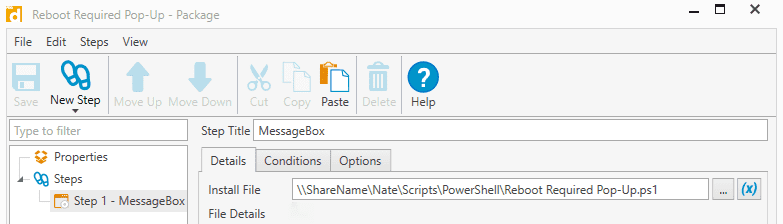

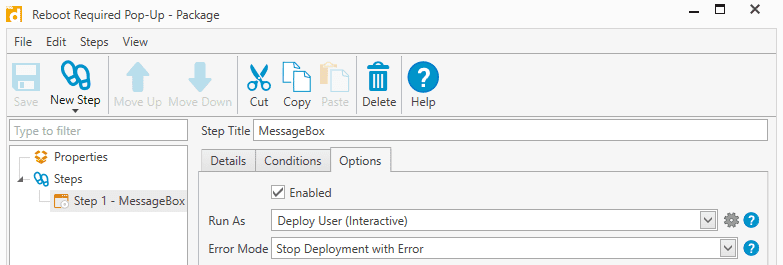

Now I can add this script into a step within Deploy. In this specific case, I’ve added the script from a network share within an install step so that I can easily edit this script on the fly if necessary.

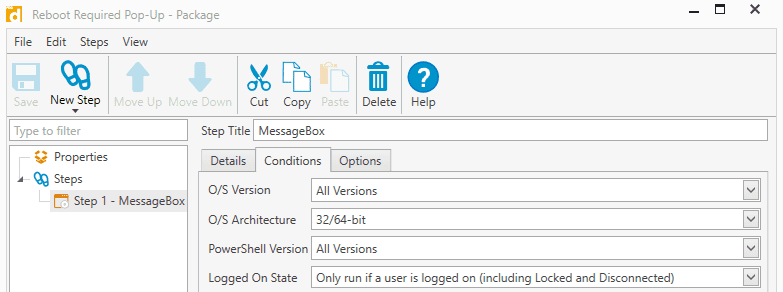

I’ve changed the Logged On State to ‘Only run if a user is logged on (including Locked and Disconnected)’ to reduce unnecessary deployments.

My last change is selecting ‘Deploy User (Interactive)’ so that my message is seen by the user but still runs using the default Deploy credentials.

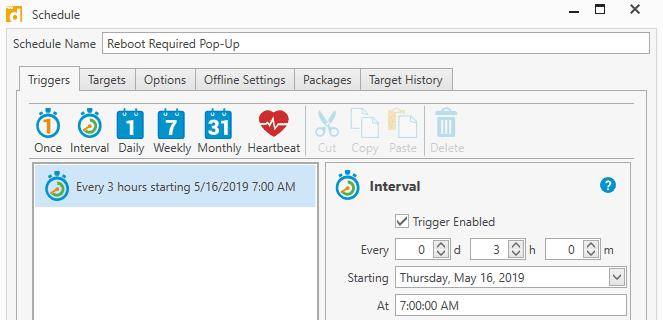

All that’s left is adding this package into a schedule. I’ve selected an interval of three hours so that most users won’t see this more than twice a day.

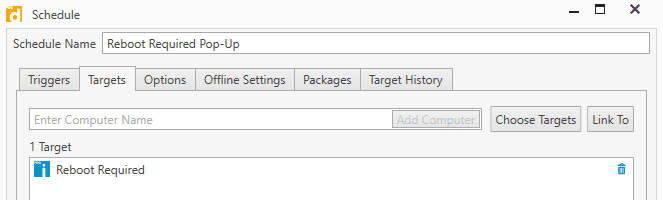

I’ve linked this schedule to the ‘Reboot Required’ collection. Now any target that meets those conditions will be deployed to.

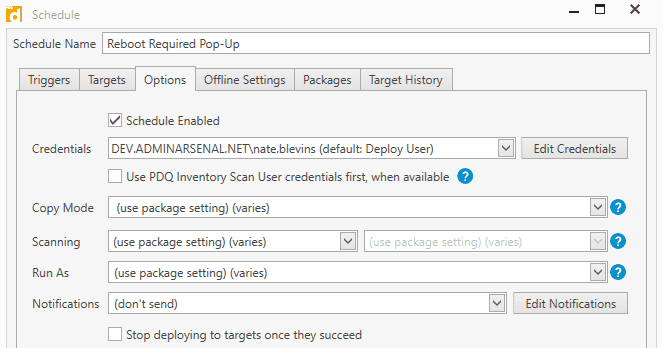

It’s important in this case to uncheck the ‘Stop deploying to targets once they succeed’ option as the message would only show for the user once otherwise. Attach the package to the schedule and you’re ready to go!

Wrapping up

Now your users will help you do your job more effectively, and you don’t even have to think about it. You can use PDQ Inventory to narrow down computers by specific criteria, then use Deploy to take action. Automate Windows Cumulative Updates, baseline applications for your images, and patch specific vulnerabilities. If you’re manually doing the same task over and over again, you’re doing it wrong.